How much did AlphaGo Zero cost?

Update (June 2020): I’ve made it to the front page of Hacker News! Thank you to the kind soul who decided to share this with the world. There is more discussion on the HN page. I wrote this in March 2018 as a reflection of what was going on in the world of AI research. As one commenter points out, a lot has changed since then with regard to training efficiency.

One of the most exciting signs of progress in machine learning in 2017 was the emergence of AlphaGo as the best Go player in the world. In October, DeepMind published a paper describing a new version of AlphaGo, called AlphaGo Zero. After merely 36 hours of training, AlphaGo Zero had become better at Go than the version of itself that defeated Lee Sedol.

Not only that, AlphaGo Zero learned to play Go without any prior knowledge of the game (in other words, tabula rasa). By comparison, the previously published version of AlphaGo was trained with the help of a database of human Go matches.

This accomplishment is truly remarkable in that it shows that we can develop systems that teach themselves to do non-trivial tasks from a blank slate, and eventually become better than humans at doing the task. It suggests that a whole world of possibilities is now within reach – just imagine computers that can teach themselves to do anything that a human can do.

But progress like this doesn’t come cheap. Just as human mastery of Go requires years of training, computer mastery of Go requires an enormous amount of resources. I estimate that it costs around $35 million in computing power to replicate the experiments reported in the AlphaGo Zero paper.

Analysis

AlphaGo Zero learns to play Go by simulating matches against itself in a procedure referred to as self-play. The paper reports the following numbers:

- Over 72 hours, 4.9 million matches were played.

- Each move during self-play uses about 0.4 seconds of computer thinking time.

- Self-play was performed on a single machine that contains 4 TPUs – special-purpose computer chips built by Google and available for rental on their cloud computing service.

- Parameter updates are powered by 64 GPUs and 19 CPUs, but as it turns out, their cost pales in comparison to the cost of the TPUs that were used for self-play.

One number that is suspiciously missing is the number of self-play machines that were used over the course of the three days.¹ Assuming an average of 211 moves per Go match, the 4.9 million matches add up to roughly 413.6 million seconds of thinking time; spreading that across the 72 hours each machine was running gives 1,595 self-play machines, or 6,380 TPUs. (Calculations are below.)
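The machine count comes from dividing the total thinking time of all self-play moves by the time one machine had available. A minimal Python sketch of that arithmetic, assuming the author's 211-moves-per-match average and that each machine works through one move at a time, back to back:

```python
# Estimate how many 4-TPU self-play machines the 72-hour run needed,
# assuming each machine plays one move at a time, back to back.

GAMES = 4_900_000       # self-play matches reported for the 72-hour run
MOVES_PER_GAME = 211    # assumed average match length (the author's estimate)
SECONDS_PER_MOVE = 0.4  # thinking time per move
RUN_HOURS = 72          # length of the run
TPUS_PER_MACHINE = 4    # TPUs in one self-play machine


def machines_needed(games, moves_per_game, seconds_per_move, run_hours):
    """Total thinking time for all moves, divided by one machine's run time."""
    total_move_seconds = games * moves_per_game * seconds_per_move
    seconds_per_machine = run_hours * 3600
    return total_move_seconds / seconds_per_machine


machines = machines_needed(GAMES, MOVES_PER_GAME, SECONDS_PER_MOVE, RUN_HOURS)
whole_machines = int(machines)  # drop the fractional machine, as 1,595 does
whole_tpus = whole_machines * TPUS_PER_MACHINE

print(f"machines (exact): {machines:,.1f}")     # prints 1,595.5
print(f"machines (whole): {whole_machines:,}")  # prints 1,595
print(f"TPUs:             {whole_tpus:,}")      # prints 6,380
```

The exact quotient is about 1,595.5 machines; the figure in the text keeps only the whole-number part.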

At the quoted rate of $6.50/TPU/hr (as of March 2018), the whole venture would cost $2,986,822 in TPUs alone to replicate. And that’s just the smaller of the two experiments they report:

> We subsequently applied our reinforcement learning pipeline to a second instance of AlphaGo Zero using a larger neural network and over a longer duration. Training again started from completely random behaviour and continued for approximately 40 days.
>
> Over the course of training, 29 million games of self-play were generated…

The neural network used in the 40-day experiment has twice as many layers (of the same size) as the network used in the 3-day experiment, so, assuming nothing else about the experiment changed, making a single move takes about twice as much computer thinking time: roughly 0.8 seconds. Repeating the calculations with 29 million games at 0.8 seconds per move leads to a final cost of $35,354,222 in TPUs to replicate the 40-day experiment.
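Both dollar figures follow from converting total self-play thinking time into TPU-hours and multiplying by the rental rate. A rough Python sketch under the article's assumptions (211 moves per match, 4 TPUs per machine, $6.50/TPU/hr, and 0.8 s/move for the deeper 40-day network); the function name `tpu_cost` is just for illustration:

```python
# Rough TPU rental cost of replicating each AlphaGo Zero experiment.
# Assumptions: 211 moves per game (author's estimate), 4 TPUs per machine,
# $6.50/TPU/hr (March 2018 quote), and 0.8 s/move for the 40-day network
# on the premise that doubling the layers doubles the thinking time.

TPU_RATE_USD_PER_HOUR = 6.50
TPUS_PER_MACHINE = 4
MOVES_PER_GAME = 211


def tpu_cost(games, seconds_per_move, rate=TPU_RATE_USD_PER_HOUR):
    """Dollar cost of the TPU-hours needed to play every self-play move."""
    machine_seconds = games * MOVES_PER_GAME * seconds_per_move
    tpu_hours = machine_seconds * TPUS_PER_MACHINE / 3600
    return tpu_hours * rate


three_day = tpu_cost(games=4_900_000, seconds_per_move=0.4)
forty_day = tpu_cost(games=29_000_000, seconds_per_move=0.8)

print(f"3-day run:  ${three_day:,.0f}")   # prints $2,986,822
print(f"40-day run: ${forty_day:,.0f}")   # prints $35,354,222
```

Note that the length of the run drops out of the cost entirely: it only determines how many machines must run in parallel, not how many TPU-hours are consumed in total.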

As for what the experiment actually cost DeepMind (a subsidiary of Google’s parent company) to run, other factors would need to be taken into account, such as researcher salaries, and the fact that the quoted TPU rate probably includes a healthy margin. But for someone outside Google, this number is a good ballpark estimate of how much it would cost to replicate the experiment.

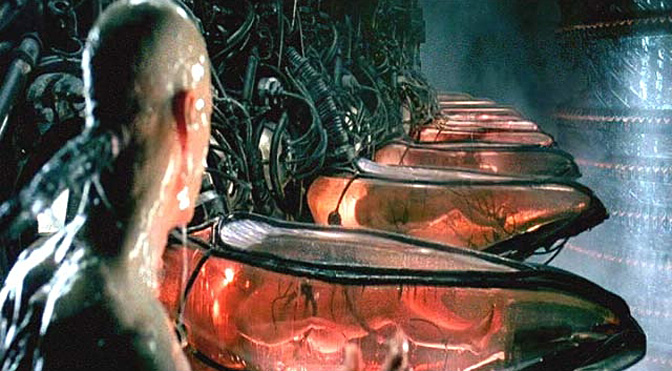

Cost in human computation (“The Matrix” metric)

Another way to look at the staggering cost of the AlphaGo Zero experiment is to imagine how many human brains would be required to draw the same amount of power.

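Given that each TPU draws roughly twice as much power as a human brain (which runs on about 20 watts), the 6,380 TPUs used for self-play in the 3-day experiment consume as much power as 12,760 human brains running continuously.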
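The brain count is a single multiplication: the 6,380 self-play TPUs from the 3-day experiment times the number of brains each TPU is treated as equivalent to. That ratio is inferred here by dividing 12,760 by 6,380, so the value of 2 below is an assumption rather than a measured power figure ($P_{\text{TPU}}$ and $P_{\text{brain}}$ denote the power draw of one TPU and one brain):

$$
N_{\text{brains}} = N_{\text{TPU}} \times \frac{P_{\text{TPU}}}{P_{\text{brain}}} = 6{,}380 \times 2 = 12{,}760
$$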

Final thoughts

This is not to say that AlphaGo Zero is not an amazing achievement (it is!). AlphaGo Zero showed the world that it is possible to build systems that teach themselves to do complicated tasks. It’s just that developing this sort of general technology is still out of reach for most people.

That said, many problems of real-world value require neither (1) learning tabula rasa nor (2) superhuman performance. Perhaps, by applying domain knowledge along with the techniques presented in AlphaGo Zero, these problems can be solved far more cheaply than it cost to create AlphaGo Zero.

Footnotes

The cost estimate calculations are below:

1. A naïve interpretation of the paper is that all the self-play matches took place on a single machine. But this doesn’t make sense: a single machine is only capable of making [72 (hr) × 3600 (s/hr)] ÷ 0.4 (s/move) = 648,000 moves over the course of three days, whereas the paper reports that 4,900,000 matches (over a billion moves, at about 211 moves per match) were played. Clearly this means that there were multiple self-play machines running in parallel.